With the rapid growth of fake news and disinformation campaigns, a new danger has just joined the growing list of problems, although, depending on how it is used, the same technology can also be extremely useful.

The danger in question is a deepfake tool: software that uses machine learning to edit the words spoken in a video, either deleting them or changing them into others, all in a frighteningly natural way.

Researchers at Stanford University, together with scientists at the Max Planck Institute for Informatics, Princeton University, and Adobe Research, showed how it is possible to edit what is said in a video and create altered copies of the content.

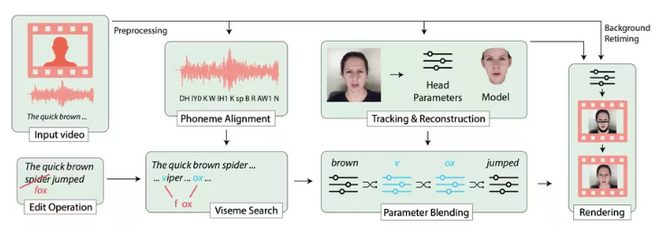

To get a convincing result, the footage has to go through several processing steps, as described in the video above.

The original footage is analyzed, the phonemes are isolated, and each one is matched with the facial expression that accompanies the sound; after all that, a 3D model of the lower part of the face, including the mouth, chin, and teeth, is produced.

With all of this data combined, you can edit the text transcript of the video and produce completely new material. Worst of all, the change is very hard to detect.
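To make the transcript-editing step more concrete, here is a minimal sketch of how a tool could work out which words were changed between the original and the edited transcript. It uses Python's standard `difflib` module; the function name `transcript_edits` and the example sentences are made up for illustration, and this is not the researchers' actual code.

```python
from difflib import SequenceMatcher


def transcript_edits(original: str, edited: str):
    """Return the word-level changes needed to turn `original` into `edited`.

    Hypothetical helper for illustration only: it compares the two
    transcripts word by word and keeps every non-matching span.
    """
    old_words = original.split()
    new_words = edited.split()
    matcher = SequenceMatcher(a=old_words, b=new_words, autojunk=False)

    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        # tag is one of "equal", "replace", "delete", "insert"
        if tag != "equal":
            changes.append((tag, old_words[i1:i2], new_words[j1:j2]))
    return changes


if __name__ == "__main__":
    for change in transcript_edits(
        "the results were released on Tuesday",
        "the results were released on Friday",
    ):
        print(change)
```

Only the spans reported here would need new video frames; everything marked as equal could be left untouched.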

In laboratory tests, for example, a group of 138 participants judged the edited videos to be real about 60% of the time. The catch is that they had been told in advance that the study was about video editing, which may have "contaminated" part of the result by making them more suspicious than a typical viewer.

Although the modified material is indeed frighteningly realistic, producing it comes with some limitations.

The algorithms only work on videos in which the speaker's face is the clear focus of the shot, and they need about 40 minutes of footage to produce a satisfactory result.

The edited speech also cannot differ much from the original: the synthetic result is assembled from phonemes that the speaker has already pronounced in the footage.
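The phoneme limitation above can be illustrated with a small coverage check: given the words spoken in the source footage, which sounds would a new sentence need that the speaker never produced? This is only a sketch under loud assumptions. The tiny `TOY_PHONEMES` table and the function names are invented for the example, and a real system would rely on a full pronouncing dictionary and on audio alignment rather than on text alone.

```python
# Toy pronunciation table (an assumption made for this example only).
TOY_PHONEMES = {
    "cat": ["K", "AE", "T"],
    "tack": ["T", "AE", "K"],
    "act": ["AE", "K", "T"],
    "dog": ["D", "AO", "G"],
}


def phonemes_of(text: str) -> list[str]:
    """Flatten a sentence into its phoneme sequence using the toy table.

    Raises KeyError for any word that is missing from TOY_PHONEMES.
    """
    phonemes = []
    for word in text.lower().split():
        phonemes.extend(TOY_PHONEMES[word])
    return phonemes


def missing_phonemes(source_text: str, edited_text: str) -> list[str]:
    """Return the phonemes the edit needs but the source never contains."""
    available = set(phonemes_of(source_text))
    needed = set(phonemes_of(edited_text))
    return sorted(needed - available)


if __name__ == "__main__":
    # "act" reuses sounds already present in "cat tack".
    print(missing_phonemes("cat tack", "act"))
    # "dog" needs sounds the source footage never contained.
    print(missing_phonemes("cat tack", "dog"))
```

An empty result means the edit could, in principle, be assembled from existing material; a non-empty one points at sounds the footage cannot supply.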

The good news is that, at least for now, it is not possible to change the pitch of the voice or the mood of the subject in the video, but we need to keep in mind that the technology is only beginning to be explored.

We cannot rule out the destructive potential of deepfakes or the dangerous consequences they could have if exploited maliciously.

To ease concerns somewhat, the researchers ask that videos edited this way be clearly labeled, either with a logo or through the context itself, but we know that those acting with bad intentions are unlikely to respect such requests.

In the end, deepfake technology can also be put to useful and creative ends, for example by letting editors fix a line of dialogue without re-filming the scene, which would save considerable resources on many productions.